If you already know what Cohen’s kappa is and how to interpret it, go directly to the calculator.

What is Cohen’s kappa?

Cohen’s kappa (κ) is a statistical coefficient that measures the reliability of a categorical classification. It quantifies the agreement between two raters (judges) who each classify the same items into mutually exclusive categories, taking into account the agreement that would be expected by chance.

This statistic was introduced by Jacob Cohen in the journal Educational and Psychological Measurement in 1960.

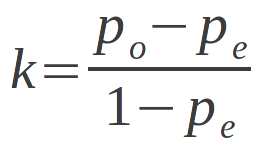

where $p_o$ is the relative observed agreement among raters and $p_e$ is the hypothetical probability of chance agreement.
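The definition translates directly into code. Below is a minimal Python sketch, assuming each rater's decisions are given as a list of category labels in the same item order; the function name `cohens_kappa` is illustrative and not part of any particular library. $p_o$ is the share of items labelled identically, and $p_e$ is obtained by multiplying the two raters' marginal proportions for each category and summing over categories.

```python
from collections import Counter
from collections.abc import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two raters who labelled the same items (illustrative sketch)."""
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("both raters must label the same, non-empty set of items")
    n = len(rater_a)

    # p_o: fraction of items on which the two raters chose the same category
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    # p_e: chance agreement, from each rater's marginal category counts
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)  # missing categories count as 0
    p_e = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)

    if p_e == 1:
        # Both raters used one and the same category for every item,
        # so the formula becomes 0/0 and kappa is undefined.
        raise ZeroDivisionError("kappa is undefined when p_e == 1")
    return (p_o - p_e) / (1 - p_e)
```

For example, with `a = ["yes", "yes", "no", "no", "yes", "no"]` and `b = ["yes", "no", "no", "no", "yes", "yes"]`, the raters agree on 4 of 6 items ($p_o = 2/3$), each answers "yes" half the time ($p_e = 0.5$), and `cohens_kappa(a, b)` gives $(2/3 - 0.5)/(1 - 0.5) = 1/3$.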

Calculating kappa is also useful in meta-analysis, during the selection of primary studies. Agreement can be assessed in two ways:

- inter-rater reliability: evaluates the degree of agreement between the choices made by two (or more) independent judges

- intra-rater reliability: evaluates the degree of agreement shown by the same person at different points in time

Interpreting Cohen’s kappa

To interpret your Cohen’s kappa results, you can refer to the following guidelines (see Landis, J. R., & Koch, G. G. (1977). The measurement of observer agreement for categorical data. Biometrics, 33, 159–174):

- 0.01 – 0.20 slight agreement

- 0.21 – 0.40 fair agreement

- 0.41 – 0.60 moderate agreement

- 0.61 – 0.80 substantial agreement

- 0.81 – 1.00 almost perfect or perfect agreement

Kappa is always less than or equal to 1. A value of 1 implies perfect agreement, and values less than 1 imply less than perfect agreement.

Kappa can also be negative. This means that the two observers agreed less often than would be expected by chance alone.
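The guidelines can be applied mechanically. The hypothetical helper `landis_koch_label` below maps a kappa value to the bands listed above, with one added assumption: because the bands are written with two decimals, any value that falls between them (for example 0.205), as well as exactly 0, is assigned to the band whose upper bound it does not exceed. Negative values are reported as less-than-chance agreement, as described above.

```python
def landis_koch_label(kappa: float) -> str:
    """Map kappa to the verbal bands of Landis & Koch (1977), as listed on this page.

    Assumption (not from the source): a value between two listed bands, or
    exactly 0, goes to the band whose upper bound it does not exceed.
    """
    if not (kappa <= 1):  # also rejects NaN
        raise ValueError("kappa must be a number no greater than 1")
    if kappa < 0:
        return "less than chance agreement"
    if kappa <= 0.20:
        return "slight agreement"
    if kappa <= 0.40:
        return "fair agreement"
    if kappa <= 0.60:
        return "moderate agreement"
    if kappa <= 0.80:
        return "substantial agreement"
    return "almost perfect or perfect agreement"
```

Keep in mind that these bands are guidelines; the cut-offs are conventions rather than sharp boundaries.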

Use the free Cohen’s kappa calculator

With this tool you can easily calculate the degree of agreement between two judges during the selection of the studies to be included in a meta-analysis.

Complete the fields to obtain the raw percentage of agreement and the value of Cohen’s kappa.
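The calculator's own code is not shown on this page. As a sketch of the computation it describes, the Python function below (the name `screening_agreement` and its parameters are hypothetical) assumes the two judges' include/exclude decisions have been tallied into a 2×2 table and returns the raw percentage of agreement together with Cohen's kappa.

```python
def screening_agreement(both_include: int, a_only: int,
                        b_only: int, both_exclude: int) -> tuple[float, float]:
    """Return (percent agreement, Cohen's kappa) for a 2x2 screening table.

    a_only: studies judge A included but judge B excluded
    b_only: studies judge B included but judge A excluded
    """
    counts = (both_include, a_only, b_only, both_exclude)
    if any(c < 0 for c in counts) or sum(counts) == 0:
        raise ValueError("counts must be non-negative and not all zero")
    n = sum(counts)

    # Raw (observed) agreement: both include or both exclude
    p_o = (both_include + both_exclude) / n

    # Marginal proportion of "include" decisions for each judge
    a_include = (both_include + a_only) / n
    b_include = (both_include + b_only) / n
    # Chance agreement: both include by chance plus both exclude by chance
    p_e = a_include * b_include + (1 - a_include) * (1 - b_include)

    if p_e == 1:
        raise ZeroDivisionError("kappa is undefined when p_e == 1")
    kappa = (p_o - p_e) / (1 - p_e)
    return 100 * p_o, kappa
```

For instance, with 20 studies included by both judges, 5 included only by judge A, 10 only by judge B and 65 excluded by both, $p_o = 0.85$ and $p_e = 0.25 \times 0.30 + 0.75 \times 0.70 = 0.60$, so `screening_agreement(20, 5, 10, 65)` returns about 85 % agreement and a kappa of $0.25 / 0.40 = 0.625$.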
